Walter Tydecks: A commentary on Laws of Form from Spencer-Brown


Based on a contribution to the Philosophical Colloquium of the Akademie 55plus Darmstadt on March 13 and 27, 2017, Version July 12th, 2019

Table of Contents

Introduction

Motives

»Distinction is Perfect Continence«

Primary Arithmetic and Primary Algebra

Re-entry (Processes, Time, Circuits)

Observer

Introduction

George Spencer-Brown (1923-2016) was from England and came from Lincolnshire, the same county as Newton. From early on he worked in parallel on overlapping questions of mathematics, engineering and psychology: in World War II as a radio operator, communications engineer and hypno-pain therapist with the Royal Navy; in 1950-51 at Trinity College Cambridge with Ludwig Wittgenstein (1889-1951); from 1960 with the mathematician and philosopher Bertrand Russell (1872-1970); and in the field of psychotherapy and child education with Ronald D. Laing (1927-1987), who was his therapist and whom he taught mathematics. He was also a writer, songwriter, chess player, game inventor and glider pilot (with two world records), a quite unusual personality. 1959-61 Chief Logic Designer at Mullard Equipment, 1963-64 Advisor at British Rail, 1963-1968 projects at mathematical institutes in England, 1968-69 full-time employment as psychotherapist with hypnosis techniques, later only part-time. From 1976 temporary professorships at universities in Australia and the USA. 1977-78 consulting for Xerox. In later years he became impoverished and withdrew from public life, but was able to live on the property of the Marquess of Bath, a former student of his. – Laws of Form was published in 1969 thanks to promotion by Bertrand Russell. A positive review by Heinz von Foerster (1911-2002) attracted some attention, but the book has remained a marginal phenomenon in mathematics, computer science and philosophy to this day. In Germany, the sociologist Niklas Luhmann (1927-1998) took up Spencer-Brown's ideas and saw them as a new approach to systems theory which, in his view, enabled a liberation from traditional philosophy. A first introduction to his logic can be found at Wikipedia. Louis Kauffman (* 1945), University of Illinois at Chicago, has further developed the approach.
There are references to computer science (von Foerster), cybernetics (Norbert Wiener), antipsychiatry (Laing), anthropology (Bateson), psychology (Piaget) and communication science (Watzlawick), systems science (Luhmann, Baecker), self-referential systems (Maturana, Varela). – Spencer-Brown is said to have been rather difficult to deal with personally, which probably has something to do with the fact that – as I think rightly – he saw his works on a par with those of Aristotle and Frege and was disappointed by the lack of recognition.

Accordingly, there is little secondary literature. Spencer-Brown's texts are composed extraordinarily compactly. The Laws of Form comprise 77 pages in the main text, supplemented by some prefaces and self-comments. In 1973 a conference took place at the Esalen Institute on the Californian Pacific coast, attended by Spencer-Brown, Bateson, von Foerster and others (American University of Masters Conference, abbreviated AUM-Conference). That already completes the list of source texts. Dirk Baecker sent me an unpublished typescript, An Introduction to Reductors, written by Spencer-Brown in 1992. In Germany, following Luhmann, there are circles that regularly work on Spencer-Brown at the anthroposophically influenced private university Witten/Herdecke in the Ruhr area, in Heidelberg and in Munich. As secondary literature, I have therefore used Luhmann's complex chapter on observation from his 1990 work Wissenschaft der Gesellschaft (Science as a Social System), in which he presents his position on Spencer-Brown, and, building on this, an anthology Kalkül der Form (Calculus of Form), edited by his student Dirk Baecker in 1993, which also contains Heinz von Foerster's review, as well as Luhmann's 1995 lecture Die neuzeitlichen Wissenschaften und die Phänomenologie (Modern Sciences and Phenomenology) in Vienna, in which he explains his philosophical position on Husserl and Spencer-Brown. In 2016, Claus-Artur Scheier, in Luhmanns Schatten (Luhmann's shadow), linked his approach to the newer French philosophy and formalized it in his own way. In Heidelberg a short introduction to the logic of Spencer-Brown by Felix Lau was published in 2005, and in Munich an extended introduction by Tatjana Schönwälder-Kuntze, Katrin Wille and Thomas Hölscher was published in 2004 (second edition 2009). They are associated with Matthias Varga von Kibéd (* 1950), who studied with the logician Ulrich Blau (* 1940).
Blau has written standard books on new directions in logic, in which, however, he does not mention Spencer-Brown. Varga von Kibéd has turned to Far Eastern traditions and has become active in the founding, introduction and marketing of new methods of systemic structures, which refer to Spencer-Brown.

In summary, Spencer-Brown has mainly influenced the theory of self-referential systems and new methods of systemic therapy, but is largely ignored and unknown in traditional philosophy, mathematics and computer science.

For me too, this contribution is a first beginning and introduction, and it can only try to approach further philosophical questions. However, I suspect that his idea contains a potential that is far from exhausted.

Motives

In his highly self-reflexive work, Spencer-Brown stresses »There can be no distinction without motive« (LoF, 1). This seems to me to be the best approach to this unusual work. In order to understand his thoughts, it is hardly possible to rely on what has been learned and practiced in mathematics, logic or philosophy so far. On the contrary, he encourages us to unlearn much of it and to become aware again of the deeper motives which originally underlie our enthusiasm for mathematics and which have largely been lost.

– Liar paradox and negative self-reference

The first motive is surely the concern with the liar paradox ›This sentence is wrong‹. With it, the logical foundations of mathematics and thus of all natural science were fundamentally questioned. This caused a deep sense of uncertainty that is hardly imaginable today, since we have become »accustomed« to it. At the same time, with the decline of all traditional religious and mythical beliefs, mathematics and natural science had become the only force promising identity and security. If their foundations are shaken too, every hold is in danger of being lost. Robert Musil has written about this marvellously in his novel Die Verwirrungen des Zöglings Törleß (The Confusions of Young Törless), published in 1906. Anyone who studied mathematics between 1900 and 1980 was almost inevitably confronted with Russell's paradox and was plunged into a crisis comparable to the skeptical challenge to philosophy. Only the long-lasting economic upswing in the Western countries after 1945 was able, at least for a while, to push all concerns and questions of this kind into the background and establish bare pragmatism and ordinary materialism as new values. What danger should such a paradox pose when the applications of the same science are so successful around it? In such times, Spencer-Brown's questions have little chance of resonating, since they threaten to stir up the questions that had just been laboriously swept under the carpet.

The liar paradox is the most famous example of negative self-reference: the sentence refers to itself, and it negates itself. This leads to the paradox: If this statement is right, it is wrong, and if it is wrong, it is right.

This paradox can be brought into many different forms. Ulrich Blau has pursued them into their outermost ramifications, with the result that nothing in the usual logic leads out of this paradox.

The sentence is by no means just a logical gimmick that every teenager between the ages of 12 and 16 goes through, but in the original formulation »a Cretan says that all Cretans lie« it is of mythological importance when Cretans had to cope with the fear that they might have killed God on the island of Crete. In philosophy Hegel gave it systematic significance and it is considered the decisive characteristic of the dialectical method.

Bertrand Russell was initially Hegelian, but could not be satisfied with Hegel's speculative sentences on contradiction. For him, there had to be a formal solution that could withstand the demands of traditional logic. He succeeded only negatively with the prohibition of self-referential sentences, and he was therefore enthusiastic when Spencer-Brown was able to present a completely new formal solution.

– Imaginary numbers

Spencer-Brown was looking for a way out based on the example of imaginary numbers. The imaginary numbers are still a strange, mysterious foreign body within mathematics. Even the name ‘imaginary’ is completely untypical of the usual mathematical thinking. Are these numbers imaginary like a dream, or are they mere images, virtual objects of thought, born out of the imagery (fantasy) of the soul? What distinguishes a mathematical imago from a psychological imago? Questions of this kind certainly moved Spencer-Brown a lot after his therapy with Laing.

The expression ‘imaginary number’ goes back to Descartes and Euler. For mathematicians it is a consistent formalism to extend the space of numbers, and for engineers and physicists it is an extremely helpful tool to simplify complex equations, even if they can usually hardly answer the question of why this is possible with imaginary numbers. As a communications engineer, Spencer-Brown was undoubtedly familiar with this meaning and had learned how engineers and scientists simply calculate with such numbers, because it works well and proves itself without asking about the mathematical basics.

In the preface to the first American edition of Laws of Form in 1972, he saw an amazing relationship between imaginary numbers and the question of Russell's paradox. Russell and Whitehead wanted to exclude the threat of paradox in type theory by prohibiting self-referential elements. Spencer-Brown considered this a mistake. »Mistakenly, as it now turns out.« (LoF, ix) Spencer-Brown reports that he met Russell in 1967 and showed him how to work without this exclusion. »The Theory was, he said, the most arbitrary thing he and Whitehead had ever had to do, not really a theory but a stopgap, and he was glad to have lived long enough to see the matter resolved.« (LoF, x)

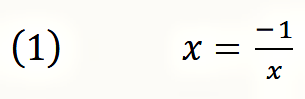

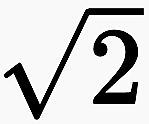

In order to define a negative self-reference within arithmetic, an equation must be established in which a variable x appears as its own reciprocal value and is negated with the minus sign:

(1) x = −1/x

With this equation the negative self-reference is formalized: The reciprocal stands for the self-reference, the minus sign for the negation.

At first glance, this equation looks just as harmless as the sentence ›this sentence is wrong‹ does to someone who hears it for the first time. Everybody at first takes it for a common statement about another sentence (one says »the sky is green« and another answers »this sentence is wrong«) until its inner explosive force is seen through when it refers to itself. So formula (1) does not look unusual at first sight. The paradox it contains becomes visible when x is set to 1 or −1:

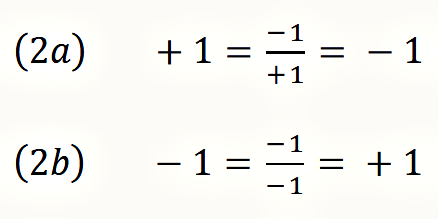

1 and −1 are the simplest possible integers, and they show that there is no integer as solution for this formula. With it, Spencer-Brown has recreated the paradox of negative self-reference within arithmetic. (Scientists trained in traditional logic and analytical philosophy, however, deny that it is permissible to transform Russell's paradox, originally formulated in set theory, in this way and to find an astonishing solution. This was my experience at the Wittgenstein Symposium 2018 in Kirchberg am Wechsel.)

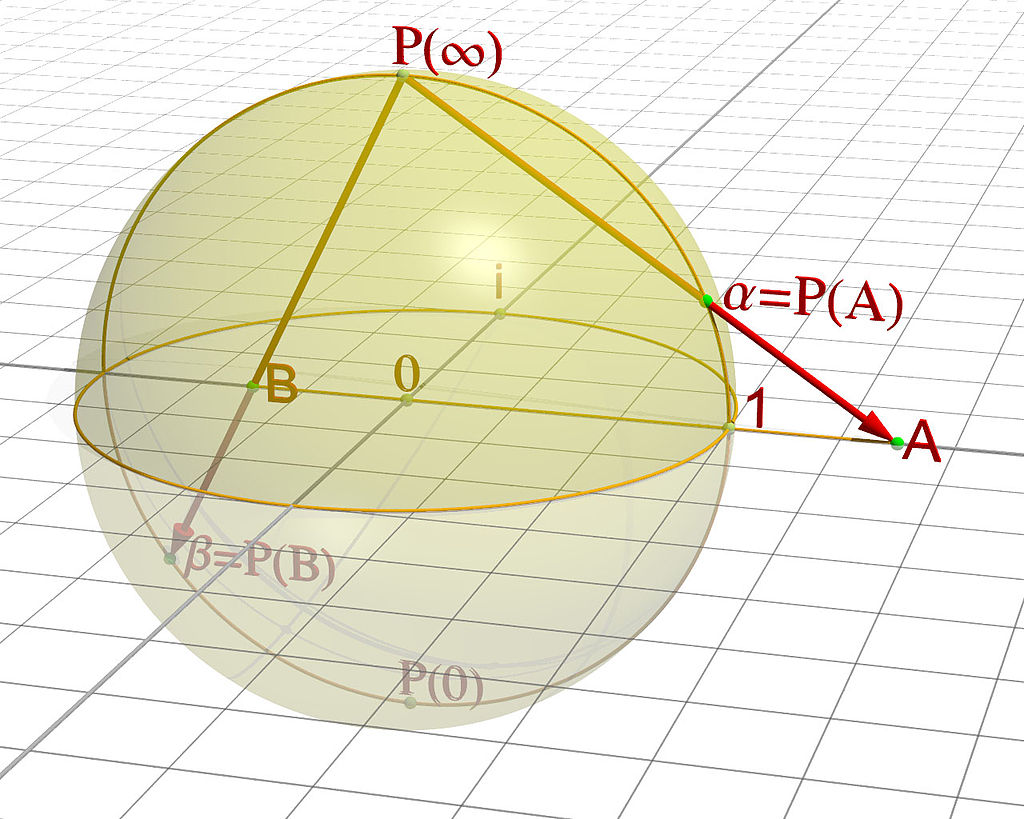

The only way out Whitehead and Russell had seen was to assign questions of this kind the indefinite, third truth value ‘meaningless’ and thereby to exclude them. While it is not possible to operate logically and mathematically with this truth value, a solution can be found with the imaginary numbers, which allows calculating with an additional truth value. Like the y-axis, the imaginary numbers are perpendicular to the number axis. Their unit is the newly introduced number i. Both i and −i are solutions of equation (1).
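The claims about equation (1) can be checked numerically. The following is only a minimal sketch using Python's built-in complex numbers; the function name `satisfies_self_negation` is chosen here for illustration and does not come from Laws of Form.

```python
def satisfies_self_negation(x: complex) -> bool:
    """Return True if x equals its own negated reciprocal, i.e. x == -1/x."""
    return x == -1 / x


# Candidates discussed in the text: the integers 1 and -1, and the units i and -i.
candidates = {"1": 1, "-1": -1, "i": 1j, "-i": -1j}
results = {name: satisfies_self_negation(value) for name, value in candidates.items()}

for name, ok in results.items():
    print(f"x = {name:>2}: x == -1/x is {ok}")
```

For 1 and −1 the check fails, because each value is mapped onto the other; for i and −i it succeeds.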

That's what Spencer-Brown is about. He wonders whether, following the example of imaginary numbers in arithmetic, imaginary numbers can also be introduced into logic with far-reaching consequences for logical thinking.

»What we do in Chapter 11 is extend the concept of Boolean algebras, which means that a valid argument may contain not just three classes of statement, but four: true, false, meaningless, and imaginary.« (LoF, xi)

– Traditional logic is both too simple and too complex.

These examples already show the new approach to logic by Spencer-Brown. He was completely dissatisfied with the traditional logic. For him it is banal, not to say childish, and at the same time unnecessarily complicated.

(1) On the one hand, traditional logic is too simple, almost childish.

Anyone who has ever read textbooks of logic with their examples understands what Spencer-Brown means. A typical logical task is: »Which of the following assertions is true: Some apples are bananas. All apples are bananas. Some bananas are not apples. Some bananas are apples.« The correct solution is hidden in the sentence »Some bananas are not apples.« No one realizes that immediately, because no one would say so in everyday life, as everyone would spontaneously understand that sentence to imply that there are some bananas that are apples. But formally this sentence is correct. It is intended to teach an abstract understanding of logic that is independent of a prior understanding of content and a feeling for language.
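How the formal reading differs from the everyday one can be made concrete with sets. This is a minimal sketch, assuming that apples and bananas are modelled as two non-empty, disjoint sets and that »some« is read as »at least one«; the element names are invented for illustration.

```python
# Assumption: two non-empty sets with no common element.
apples = {"apple_1", "apple_2"}
bananas = {"banana_1", "banana_2"}

assertions = {
    "Some apples are bananas": any(a in bananas for a in apples),
    "All apples are bananas": all(a in bananas for a in apples),
    "Some bananas are not apples": any(b not in apples for b in bananas),
    "Some bananas are apples": any(b in apples for b in bananas),
}

for sentence, value in assertions.items():
    print(f"{sentence}: {value}")
```

Under these assumptions only the third sentence holds. The formal »some are not« carries no implication that some other bananas are apples, which is exactly what everyday language would suggest.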

Is logic nothing more than the search for funny brain teasers that everyone has to smile about? This can be expanded at will: Is a sentence like »the number 2 is yellow« true or false, and what can be deduced from this sentence? What is to be thought of the conclusion: »If John has no children, both the statement ‘All John's children sleep’ and the contrary statement ‘None of John's children sleep’ are true«? Does this refute the contradiction? Analytical philosophy continues to think up new examples according to this pattern and has been struggling with questions of this kind for decades, although Herbert Marcuse (1898-1979) had already mocked this way of thinking in the 1960s (One-dimensional Man, 1964) and basically sees in it a certain kind of education for stupidity, which unfortunately dominates almost all western philosophical faculties at which philosophers of this direction are preferentially employed. Obviously, people and especially teachers who can teach in schools with this kind of logic are to be trained on a large scale at the universities.
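The example of John's children rests on what logic calls vacuous truth: a universal statement about an empty collection holds. A short sketch, assuming the children are represented as an empty list; the predicate `is_asleep` is hypothetical and is deliberately written so that it would fail if it were ever called.

```python
def is_asleep(child: str) -> bool:
    """Hypothetical predicate; it is never evaluated when there are no children."""
    raise AssertionError("no child should ever be examined")


johns_children: list[str] = []  # John has no children

all_sleep = all(is_asleep(c) for c in johns_children)       # 'All John's children sleep'
none_sleep = all(not is_asleep(c) for c in johns_children)  # 'None of John's children sleep'
some_sleep = any(is_asleep(c) for c in johns_children)      # 'Some child of John sleeps'

print(all_sleep, none_sleep, some_sleep)
```

Both universal statements come out true while the existential one is false, so the two sentences are not contradictory in the formal sense as long as nobody exists to whom they apply.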

Because of his training in logic, Spencer-Brown had been given a job to program circuits, and quickly noticed:

»The logic questions in university degree papers were childishly easy compared with the questions I had to answer, and answer rightly, in engineering. We had to devise machinery which not only involved translation into logic sentences with as many as two hundred variables and a thousand logical constants--AND's, OR's, IMPLIES, etc.--not only had to do this, but also had to do them in a way that would be as simple as possible to make them economically possible to construct--and furthermore, since in many cases lives depended upon our getting it right, we had to be sure that we did get it right.« (AUM, Session One)

As a result, although he and the other developers could generally rely on their logical thinking, they started programming and very quickly gave up systematically designing and checking their programs according to the rules of learned logic, but were satisfied when they saw that the circuits they had programmed worked without being able to explain or prove it logically. In my experience, this is the way most programmers do it, and yet it leaves a feeling of unease that there must be another kind of logic that comes closer to the practice of programming and is more helpful. (Who hasn't struggled as a programmer with methods such as the logically structured program flowchart and dropped it at some point because the effort to replicate program extensions in methods of this kind is greater than programming and testing? With complex programs, logical methods of this kind are more complicated than the program text itself, and in reality a simple pseudo code has become established for documentation, which represents the algorithms in an easy-to-read language.)

(2) At the same time, logic is far too complex.

At the beginning of every Logic and Mathematics lecture a lot of effort is put into introducing different characters, although everyone understands them intuitively. There are separate characters for constants, variables, logical operators like ∧, ∨, ¬ and quantifiers like ∀ and ∃. The various professors and textbooks outdo each other in introducing the necessary conceptual apparatus as abstractly as possible. One example is the lecture Finite Model Theory of Geschke (online in the German original):

»1.1. Structures. A vocabulary $\tau$ is a finite set consisting of relation symbols P, Q, R, …, function symbols f, g, h, … and constant symbols c, d, … Each relation and function symbol carries a natural number, its arity (Stelligkeit).

Fix a vocabulary $\tau$. A structure $\mathfrak{A}$ for $\tau$ (a $\tau$-structure) is a set A together with

(S1) relations $R^{\mathfrak{A}} \subseteq A^n$ for each n-ary relation symbol $R \in \tau$,

(S2) functions $f^{\mathfrak{A}} : A^m \to A$ for each m-ary function symbol $f \in \tau$ and

(S3) constants $c^{\mathfrak{A}} \in A$ for each constant symbol $c \in \tau$.

Often you identify a structure $\mathfrak{A}$ with its underlying set A, so you write A instead of $\mathfrak{A}$.« (Geschke, 1, my translation)
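To see what the quoted definition asks for, a structure can be written down as a small data structure. The following is only an illustrative sketch: the class `Structure`, its field names and the three-element example are assumptions made here and are not taken from Geschke's lecture.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable


@dataclass
class Structure:
    universe: set                                   # the underlying set A
    relations: dict[str, tuple[int, set[tuple]]]    # symbol -> (arity n, subset of A^n)
    functions: dict[str, tuple[int, Callable]]      # symbol -> (arity m, map A^m -> A)
    constants: dict[str, object]                    # symbol -> element of A

    def is_well_formed(self) -> bool:
        """Check conditions (S1) to (S3) of the quoted definition."""
        for arity, tuples in self.relations.values():           # (S1)
            for t in tuples:
                if len(t) != arity or any(x not in self.universe for x in t):
                    return False
        for arity, func in self.functions.values():             # (S2)
            for args in product(self.universe, repeat=arity):
                if func(*args) not in self.universe:
                    return False
        return all(c in self.universe for c in self.constants.values())  # (S3)


# Hypothetical example: the numbers 0, 1, 2 with an order, a successor and a zero.
example = Structure(
    universe={0, 1, 2},
    relations={"less": (2, {(0, 1), (0, 2), (1, 2)})},
    functions={"succ": (1, lambda x: (x + 1) % 3)},
    constants={"zero": 0},
)
print(example.is_well_formed())
```

Even this small sketch already uses sets, tuples and a formal language of symbols at the same time, which illustrates how the notions depend on each other from the start.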

Anyone who has studied mathematics and logic understands why a lecture is introduced in this way, and yet everyone has the feeling that there is something wrong with this way of approaching things. In the end, the attempt is made to deduce everything from a single teaching. That does not succeed. Instead, the view seems to have prevailed today that set theory, model theory and the theory of formal languages are more or less equal at the beginning. Set theory cannot do without a formal language, formal language uses set theory, each theory can be understood as a model, which in turn requires a formal language and set theoretical operations, as can be seen in this example. The result is the impression that it has not been possible to trace the different doctrines back to their simplest elements, and that the great abstraction and the complex technical apparatus are basically an escape to avoid this question.

For Spencer-Brown, this whole tendency is on the wrong path. His first basic decision is therefore to make a substantial reduction and thus open up an area that can be described as proto-logic or proto-mathematics (primary logic, primary arithmetic). He understands his approach as »perfect continence« (LoF, 1), a moderation and self-control in clarifying the logical foundations.

– Logic and Time

Classical logic is independent of time. Only statements that are timeless in themselves are made and linked with it. A fact or an observation always remains, even with sentences that refer to a certain point in time. A sentence like »On 24th February 2017 the first crocuses of the year 2017 blossomed in Bensheim« is still valid even if the crocuses have long since faded again. It is valid even if it proves to be a deception, because this sentence in itself is a fact of my thinking. Wittgenstein opened the Tractatus logico-philosophicus in 1921 with: »1 The world is everything that is the case. […] 1.13 The facts in logical space are the world.« For him, the world is the totality of all protocol propositions. Spencer-Brown had witnessed in 1950-51 how Wittgenstein wanted to go beyond this approach. It is too little to base logic on propositions of this kind and their connections.

Now logic has not stood still. Thus in 1954 Paul Lorenzen (1915-1994) developed the operative modal logic with which the differences between possibility, reality and necessity are to be logically included and formalized. The technical apparatus is as far as possible adapted to traditional logic. This direction has taken a certain upswing in connection with quantum logic. – Even in modal logic the consideration of time is still missing. At the same time, in the 1950s, attempts were made to solve this with a temporal logic. If one takes a look at the results of both modal logic and temporal logic, one will understand why this is not what Spencer-Brown was looking for. He took a completely different approach, starting from the practical experience that, in programming, the equals sign is understood, depending on context, either as an assignment or as a test for equality.

Elena Esposito has pointed out this aspect (Esposito in Baecker Kalkül der Form, 108-110). When programming, it is neither a contradiction nor a paradox when you write:

(4a) i = i + 1

(4b) a = f(a)

In the programming line (4a), the character i does not stand for a variable as in a mathematical equation, for which an arbitrary number can be substituted but which must then keep that value throughout the calculation. Instead, the character i stands for a storage location whose contents can be changed during the execution of the program. In both assignments, the result on the left side comes later in time than the arithmetic operation on the right side. The assignment ›i = i + 1‹ is one of the most frequently used programming lines, for example when a loop counts, in the course of its temporal repetition, how often it has been passed through. – The statement ›a = f(a)‹ is also a temporal operation: it reads the value currently stored at storage location a, recalculates it with a function f, and then reassigns the result to storage location a. The a on the left side therefore usually has a different value than the a on the right side. If, for example, in an asset management system, the value of an asset is located at storage location a and recalculated with function f, the content of storage location a changes as a result. The »old a« is located on the right-hand side and the »new a« calculated with function f is located on the left-hand side. The difficulty in understanding arises because abbreviations are used in programming: the character a stands both for the storage location and for the value stored at this storage location.
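The temporal reading of (4a) and (4b) can be watched directly in a running program. A minimal sketch in Python; the revaluation function `f`, the starting value and the helper names `history_i`, `old_a` and `new_a` are invented for illustration and do not come from the source.

```python
def f(value: int) -> int:
    """Hypothetical revaluation of an asset: write it down by a fixed amount."""
    return value - 100


# (4a): i names a storage location whose content changes over time.
i = 0
history_i = [i]
for _ in range(3):
    i = i + 1               # the right side is read first, the left side is written afterwards
    history_i.append(i)

# (4b): the "old a" is read on the right, the "new a" is stored on the left.
a = 1000
old_a = a                   # keep a copy of the content before the assignment
a = f(a)
new_a = a

print(history_i)            # successive contents of the storage location i
print(old_a, new_a)         # content of a before and after the assignment
```

Read as a timeless equation, neither line has a solution in this example; read as a step in time, each line simply replaces the old content of a storage location by a new one.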

To explain what happens during programming and to find a suitable logic for it is in my opinion one of the strongest motives of Spencer-Brown. If his work has so far been almost completely ignored by computer science, it only shows to what extent computer science is incapable of making something like a transcendental turn towards its own actions, as Kant had done within philosophy and as, in my impression, Spencer-Brown sought to do for programming. Especially for a mathematician and computer scientist, Spencer-Brown's book is not easy to read. Although he is more familiar than others with formal considerations of this kind, he must free himself from entrenched prejudices. He must – as Spencer-Brown said – unlearn a lot, and approach the unlearning step by step, in order not to get into too much confusion already at the first step.

Spencer-Brown answered a question from John Lilly (1915-2001) (neurophysiologist, dolphin research, study of drugs and their effects like LSD, influence on New Age):

»LILLY: Have you formulated or recommended an order of unlearning?

SPENCER BROWN: I can't remember having done so. I think that, having considered the question, the order of unlearning is different for each person, because what we unlearn first is what we learned last. I guess that's the order of unlearning. If you dig too deep too soon you will have a catastrophe; because if you unlearn something really important, in the sense of deeply imported in you, without first unlearning the more superficial importation, then you undermine the whole structure of your personality, which will collapse.

Therefore, you proceed by stages, the last learned is the first unlearned, and this way you could proceed safely.« (AUM, first session)

– The influence of the observer

Kant's transcendental turn is a new kind of self-observation with which thinking examines its own actions. Kant was aware that he was thereby following a turn that had already occurred in astronomy with the Copernican turn, when it had become clear how the world view depends on the respective position of the observer. The world presents itself differently when it is no longer seen in thought from the earth, but from the sun. Materially everything remains the same, but the movements of the sun, the planets and the moon are mathematically much easier to bring into equations of motion. They indicate patterns (Kepler's laws), which cannot be seen from the earth with the naked eye.

With Einstein, the speed at which an observer moves plays a role in how he perceives other movements. In quantum mechanics it is feared that nothing can be measured as it is because it is changed by the measurement, i.e. the measuring process interferes with the measurement.

The observer is of even greater importance for psychology and sociology, and it is not without reason that Spencer-Brown's teachings have been taken up there. Kant considered scientific psychology to be impossible. For him it was questionable whether chemistry could ever become a science with mathematical methods, but for psychology he considered that to be completely impossible. Every thinking being will notice when it is observed and react accordingly. Therefore, the observer can never be sure whether the other behaves the way he does only because he has attuned himself to the observer. Psychoanalysis has partially taken this into account since it is aware of the phenomenon of the transference (Übertragung) recognized by Freud, when a patient adapts unnoticed to the analyst's expectations and the analyst behaves unconsciously as the patient expects of him (countertransference, Gegenübertragung).

»But not even as a systematic art of analysis, or experimental doctrine, can it (psychology, t.) ever approach chemistry, because in it the manifold of internal observation is only separated in thought, but cannot be kept separate and be connected again at pleasure; still less is another thinking subject amenable to investigations of this kind, and even the observation itself, alters and distorts the state of the object observed. It can never therefore be anything more than an historical, and as such, as far as possible systematic natural doctrine of the internal sense, i.e. a natural description of the soul, but not a science of the soul, nor even a psychological experimental doctrine. « (Kant The Metaphysical Foundations of Natural Science, Preface, MAN, AA 04:471.22-32, translated by Ernest Belfort Bax)

Was this confirmed for Spencer-Brown in his own psychotherapeutic experience? Contrary to Kant's belief, can patterns be recognized in the behavior of the observer and the observed in psychological practice that go beyond the respective personality? I do not know whether and how intensively Spencer-Brown was familiar with the teachings of Husserl, Heidegger, Sartre and others, who have investigated this question. Luhmann and Scheier understand the logic of Spencer-Brown from this context.

Here the question of re-entry takes on a new meaning. In psychological and sociological practice, re-entry is not simply a possibly purely mechanical resonance or feedback that, as in self-referential systems, takes place almost automatically; rather, the observer receives feedback from the observed on his behavior and reacts to it.

– Process and result

When Spencer-Brown emphasizes pragmatic action from his professional experience, he questions the traditional relationship between process and result. Since traditional logic knows only timeless statements that are always valid and never change, it has not examined the logic of processes. For Aristotle, logic and physics were clearly separated. He systematically examined the timeless statements in his two works on the Analytics (Analytika protera and Analytika hystera), whereas in physics, as the doctrine of ephemeral things and beings subject to Coming to Be and Passing Away (the title of another text by Aristotle, Peri geneseôs kai phthoras), time, movement and change occur. In his Physics he introduced with energeia and entelecheia new concepts that apply only to the course of processes, consciously leave the framework of the Analytics and come close to a new logical understanding. (They have since become basic concepts in physics and biology as energy and entelechy. Heidegger had described them as existentials (Existentialien), but considered it impossible to find a formalization comparable to traditional logic.)

Spencer-Brown wants to base logic on concepts in which process and result are not yet clearly separated. He shares this concern with Hegel, who has systematically used such concepts. 'Beginning': This can be both the process of beginning and the result that was started. Similar to determination, development, measurement etc.: A measurement can be the process of measuring, as well as the value that was measured. Determination can be the process of determining as well as the result that something is so and not differently determined.

Spencer-Brown bases his logic with distinction and indication on similar terms. This can be the process of naming and distinguishing as well as the result when a distinction is made and a name is given. It is the process of distinguishing something, and in the result the sign (mark) and the value that is given to the something with the distinction.

If one begins with concepts in which the distinction between process and result is consciously kept open, then there must be a clearly recognizable and comprehensible way within logic to introduce time and movement there and to separate this openness into its two components. I see this motive especially in the distinction between primary arithmetic and primary algebra as well as in the introduction of re-entry and the time given with re-entry.

– The signs and their medium

If it is unusual for a mathematician to accept the changed meaning of variables in the temporal course of assignment in the programming and logic of Spencer-Brown on the one hand and the timelessly conceived mathematical equations on the other hand, it requires an even more radical rethinking to regard the relationship between sign and medium as a reciprocal process. It is almost absurd for a mathematician to assume that the medium in which the mathematical signs are inscribed could influence the signs and their operations. Basically, every mathematician sees the signs of his formulas completely independently of the medium in which they are represented, preferably as objects in an entirely immaterial, purely intellectual space. If he needs a blackboard or a sheet of paper or a screen to write down his formulas there, these are only tools without influence on the contents of calculation and proof. It is possible that a formula is made blurred on a bad background and is therefore misread with results that are misleading. But this is nothing more than a disruptive factor that can ideally be excluded. The statements of mathematical propositions apply in principle independently of the medium in which they are written.

This attitude was shaken in 1948 by the work of Claude Shannon (1916-2001) on the mathematical foundations of information theory. Like Spencer-Brown, Shannon was a mathematician and electrical engineer. In his study of data transmission he demonstrated how any medium generates background noise that interferes with the transmitted characters. To this day, mathematics has not perceived, or has not wanted to perceive, the elementary consequences of this for mathematics and logic. It is still regarded as a doctrine that is independent of the medium in which it is written and through which it is transmitted. Nobody can imagine that the medium could have an influence on the signs and their statements. Mathematics is regarded as a doctrine that is developed in a basically motionless mind.

For Spencer-Brown, this attitude loses its absolute validity. The design of circuits gave him a fundamentally new experience that goes far beyond mere programming. At first glance, schematics are nothing more than a graphic, descriptive language of formulas whose logical status should resemble that of the signs of mathematics and ultimately correspond to them. But the circuits that he designed and worked with showed him that each circuit has dynamics of its own, which carry the statements and results designed with the circuit. It is possible to describe in words and formulas which input a circuit processes and to which output it leads, but it is not possible to explain fully how these results come about. Evidently the circuit contains a kind of self-organization that influences the result by itself. Whoever designs a circuit has a clear target in mind and can design and build the circuit, but he cannot completely predict what will happen in it. On the contrary, he relies on the circuit to stabilise itself, which gives the result the expected certainty. Even if closer examination can explain many of the processes that have led to the desired result in the circuit, there is always a residue. This property does not result from the graphic form and its formal elements, but from the medium in which the circuit is realized. Spencer-Brown compares it with chemistry: chemical formulas can never describe completely what happens in a chemical reaction. The real chemical process has its own dynamics, which can always lead to surprises despite all the precautions taken. From today's perspective, cell biology can be cited as another example: even the complete decoding of the DNA does not lead to a comprehensive prediction of the processes in a cell.

All these examples are texts or text-like forms (the schematic diagram, the chemical reaction equations, the DNA code) which are fundamentally incomplete and cannot completely represent the medium in which they are realized. Is this experience, in a kind of limit process, also to be transferred to mathematics and logic, or at least to be taken into account there?

This leads Spencer-Brown to the fundamental question of the relationship between the signs and the medium. The result is certainly not that the mathematics known today becomes »wrong« because it has overlooked its medium and the interaction with it. Rather, mathematics must be understood against the horizon of an overarching doctrine of medium and sign, from which the special status of mathematics, and the conditions under which it applies in the way we know it, can be understood.

For me, this is the most difficult of Spencer-Brown's motives to understand and at the same time the one with the most serious consequences. If a logic is designed that self-reflectively understands its own medium, in which it is founded, inscribed and transmitted, then this can lead to the design of a Logic of medial Modernity that does justice to man's constitution today. Scheier, in Luhmanns Schatten, pointed out in particular the importance of the medium for understanding our culture. Spencer-Brown can help to work this out further.

– Neural Networks

While the paradoxes and antinomies of Russell and Gödel are intensively discussed in philosophy, the development of a new logic, which was based on an upswing in neurophysiological research and led to neural circuits, remained largely unnoticed. Only with spectacular successes such as the Go playing program AlphaGo from 2015-16 does this change abruptly. Programs like these are the result of more than 100 years of development. I see Spencer-Brown in this tradition too.

Warren McCulloch (1898-1969) played a key role. He studied philosophy, psychology and medicine, worked primarily as a neurophysiologist, and saw himself both in the tradition of American philosophy, which has always been practically oriented and pragmatic in its thinking, and in that of the neurophysiology that emerged around 1900. From American philosophy he names Charles Sanders Peirce (1839-1914) and Josiah Willard Gibbs (1839-1903) in particular, and then, building on them, the discussions during the 1940s with Norbert Wiener (1894-1964), Arturo Rosenblueth (1900-1970), John von Neumann (1903-1957) and Walter Pitts (1923-1969). Most of them certainly saw themselves more as specialists than as philosophers, because they no longer expected any stimulating impulses from the philosophy of their time. Many of them deliberately remained outside the academic field or were not accepted there (e.g. Norbert Wiener). If deep philosophical questions nevertheless arose and were discussed, this is mainly thanks to McCulloch.

For decades McCulloch had been searching, with ever new approaches, for a new logic that went beyond the tradition from Aristotle to Frege and Russell. In 1920 he wanted to extend the copula 'is', which appears in all classical statements according to the pattern ›S is P‹, into three groups of verbs: (i) verbs that lead from an object to a subject and come from the past, while the copula 'is' occurs only in a timeless present; (ii) verbs that lead from a subject to an object and thus into the future; (iii) verbs that describe an ongoing state. But this attempt did not succeed. It was followed by efforts to find the smallest units of psychic thought, much as physics had done with electrons, photons and other physical elements. He called them psychons. They were to be connected in a way other than the traditional objects and relationships of logic, in order to represent psychic events and the diseases examined by psychiatry and to find therapies. This, too, did not lead to the expected results. The turning point came when he became familiar with the work of neurophysiology and took an active part in it as a researcher. From the very beginning, he saw in it the possibility of giving Kant's transcendental logic a new ground (the network of neurons from the sense organs to the brain takes the place of transcendental apperception and explains how all sensory stimuli are pre-shaped before thinking in ideas and concepts begins). At the end of his life he published an anthology of important works with the programmatic title Embodiments of Mind. From 1946 to 1953 he was a leading participant in the Macy conferences. Many ideas had arisen during the Second World War within the framework of the Office of Strategic Services (OSS), founded in 1942 under US President Roosevelt, in which Marcuse, Bateson, Sweezy and other representatives of the later New Left also participated. After 1945, the OSS gave rise to the CIA on the one hand, and on the other, many participants sought to continue their work in civilian applications. They were convinced that they were at the beginning of a completely new development.

As early as 1931 McCulloch had heard about the new findings of Kurt Gödel (1906-1978), and from the beginning he dealt with the results of Alan Turing (1912-1954), which were published from 1937 onward. That was the beginning of a completely new kind of logic, and it is undoubtedly McCulloch's special achievement to have linked these diverse currents together.

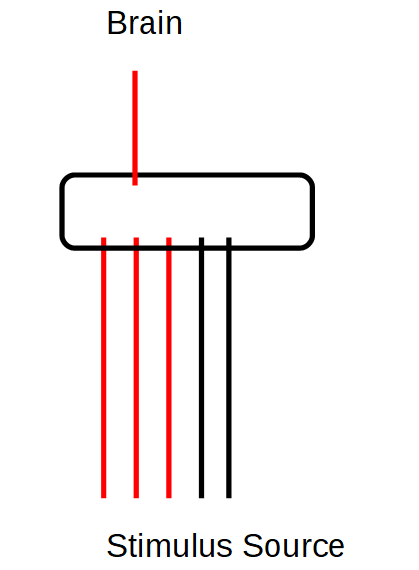

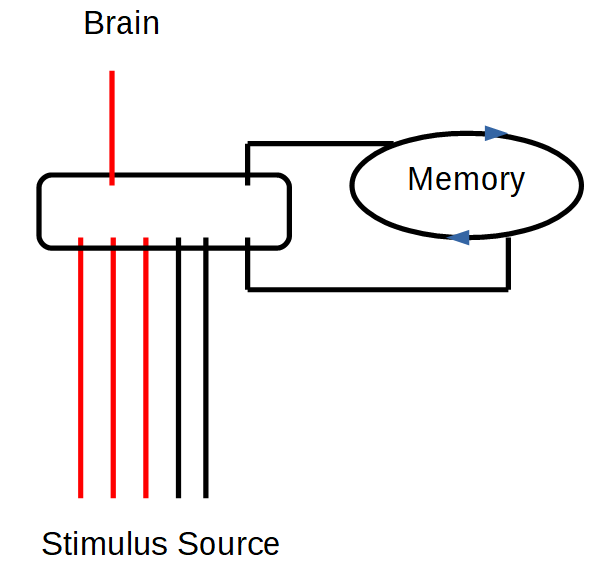

His breakthrough came with neurophysiology. It began with the physician Ramón y Cajal (1852-1934). He drew the first diagrams of sensory organs and the nerve pathways emanating from them, based on thorough studies of the nervous system and careful dissections of frogs and other animals. Using these as an example, the physician, psychiatrist and psychoanalyst Lawrence Kubie (1896-1973) described circuits (closed reverberating circuits) for the first time in 1930-41 in order to understand memory.

For me, two results are particularly important with regard to Spencer-Brown. They are two principles that were recognized in the example of the nerve tracts from the frog's eye to the frog's brain and that have an indirect effect on the Laws of Form.

Principle of Additivity: Only when a sufficient number of neural pathways report an input is it passed on to the brain. Individual, isolated inputs, on the other hand, are ignored. If the selection does not work, the brain is flooded and overtaxed. This is one cause of epilepsy.

Memory: In addition, a closed loop (a kind of spinning top) is set in motion when a threshold is exceeded. In it, information runs in a circle, and each return is a reminder that there was originally a stimulus that triggered this cycle. The activated circle remembers that something has happened. It serves as additional input to the nervous system. It indicates not only that enough neural pathways have been stimulated by the same event, but also that this event has already taken place in the past. This is the technical basis and realization of a learning system.
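The two principles can be made concrete in a small sketch. It is only an illustration under simplifying assumptions, not a model taken from McCulloch, Kubie or Spencer-Brown: inputs are reduced to 0 and 1, the names `threshold_unit` and `run_with_memory` are hypothetical, and the closed loop is reduced to a single flag that stays set once the unit has fired.

```python
def threshold_unit(inputs, threshold):
    """Principle of additivity: fire only if enough pathways report an input."""
    return sum(inputs) >= threshold


def run_with_memory(frames, threshold):
    """Process successive input frames.

    For each frame, return a pair (fired, remembered):
    `fired` says whether the threshold was reached now,
    `remembered` stands for the closed loop, which keeps circulating
    once it has been triggered by an earlier firing.
    """
    remembered = False
    history = []
    for frame in frames:
        fired = threshold_unit(frame, threshold)
        remembered = remembered or fired  # the loop, once started, keeps running
        history.append((fired, remembered))
    return history


frames = [(1, 0, 0), (1, 1, 1), (0, 0, 0)]
print(run_with_memory(frames, threshold=2))
# [(False, False), (True, True), (False, True)]
```

The isolated input in the first frame is ignored, the second frame crosses the threshold, and in the third frame there is no stimulus any more, yet the loop still reports that something has happened.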

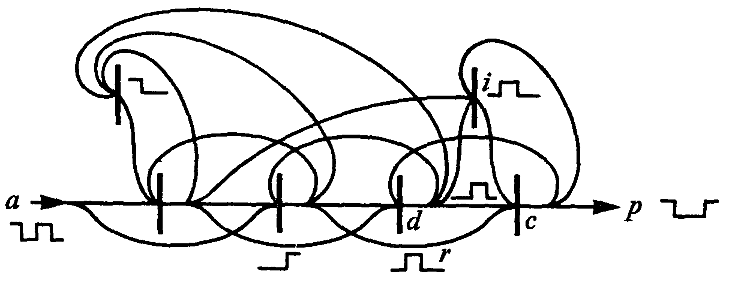

In a similar way, Spencer-Brown speaks of the memory function and represents it through closed circuits. He sought a more abstract level that fits within the framework of logic. I do not know whether he explicitly referred to this tradition, but it is clear to me that he stands in it. He was no longer able to elaborate these ideas, but they appear at decisive places in the Laws of Form. In England he may have been more impressed by the Homeostat, introduced in 1948, than by neurophysiology. Its developer was the English psychiatrist and biochemist William Ross Ashby (1903-1972). In his remarks on re-entry and circuits, Spencer-Brown curiously took up elements of the new findings of neurophysiology without making clear which intuition had led him.

McCulloch had in 1945, in a short but fundamental article The heterarchy of values determined by the topology of nervous nets, pointed to fundamental philosophical aspects of the new circuits that arose in the context of the first ideas for neural networks. In a network there is no longer a controlling, hierarchical centre, but a heterarchy of concurrent and circularly interconnected developments. This seems to me to be the basic idea that Niklas Luhmann (1927-1998) has taken up in his work on systems theory – even if he only makes very marginal reference to McCulloch (as in Soziale Systeme, 565 and Wissenschaft der Gesellschaft, 365), and why he hoped with Spencer-Brown to be able to give his systems theory a new logical basis that differs from traditional subject philosophies and their paradoxes.

– The Gordian knot

Is it possible to solve all these questions together as in a Gordian knot? That seems to me to be Spencer-Brown's motive.

Spencer-Brown's motto is a verse from Laozi's Tao Te Ching. Laozi begins his text with four verses, of which Spencer-Brown chose the third.

Wu ming tian di zhi shi

Nothing/Without Name Heaven Earth from Beginning

»Since Chinese is an isolating language and not an inflectional language, the Chinese sentence has an ambiguity that is lost in Western languages through the choice of a word type. Ambiguity depends on the grammatical role of the first word 'wu:' 'not, without, nothing'«. (SWH, 65, my translation)

The verse can therefore be translated in two ways:

– Without name is the beginning of heaven and earth

– 'Nothing' is the name of the beginning of heaven and earth.

All four verses are related:

The way that is really the way is different from the unchangeable way.

The names that are real names are other than unchangeable names.

Nameless/Nothing is the beginning of heaven and earth.

The name is the mother of ten thousand things. (quotes SWH, 65, my translation)

»Distinction is Perfect Continence«

Somewhere, Spencer-Brown's logic must begin with basic concepts that cannot be further questioned. He searches for them below the usual mathematics and language. Before every speaking and arithmetic, there are indication and distinction. With them he wants to establish a proto-logic and proto-mathematics (primary logic, primary mathematics), a calculus of indication and distinction.

»We take as given the idea of distinction and the idea of indication. We take, therefore, the form of distinction for the form.« (LoF, 1)

Without distinctions having been made, neither language nor calculation is possible: in order to calculate and to form sentences, the characters used in calculating and writing must be distinguished from each other. In an equation like ›2 + 3 = 5‹, operands (in this example the numbers 2, 3 and 5) and operators (operation signs; in this example + and = for addition and equality) are linked, and a statement is formed with them. The same holds in language. In a proposition like ›S is P‹, S and P are operands and the copula ‘is’ is an operator. The signs 2, 3, 5, = and + must be distinguishable from each other, and it must be assumed that they do not change during the calculation. If this were not possible, the equation would be meaningless. It is therefore natural to start with distinction as the proto-operation that precedes the operations of calculating and speaking, and to develop the usual logic and arithmetic from it step by step.

For Spencer-Brown there must be a sign that belongs to this level and precedes the familiar signs for operands (numbers and letters) and for linking (such as the copula ‘is’ and arithmetic symbols like +). He goes even one step further: he looks for a sign that precedes the differentiation into operands and operators and can serve as both operator and operand. For him, this elementary sign is the cross: ⃧

This sign has a multiple meaning:

– Execution of an elementary operation (drawing this character, draw a distinction)

– Highlighting an interior (mark)

– Marking of an inner area (marked space) (asymmetry of inside and outside)

– Drawing a boundary (boundary with separate sides)

– Distinguishing a ground from the sign drawn in the ground (ground, medium)

– Calling the border the sign ⃧ (indication, call)

In the latter meaning, it is self-referential: With its outward appearance, it clearly shows the difference between an inside and an outside, and at the same time this sign is the name for this difference. This gives value to the inside.

The amazing thing is that with this single character not only the operands but also the operators can be represented. Unlike traditional logic and mathematics, Spencer-Brown does not distinguish between signs for operands and operators, but implicitly defines the operations (the calculation and the formation of statements) by the mere arrangement of the operands: These are the two operations 'repetition' and 'nesting'. With them the further logic can be constructed completely.

All further signs are constructed step by step from these two signs and their two arrangement possibilities, just as in Euclidean geometry all constructions result from straight lines and circles.

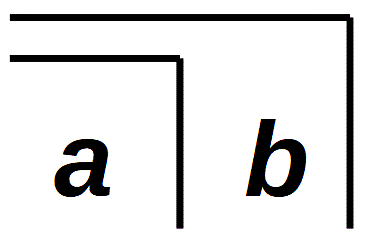

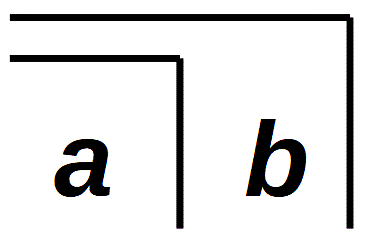

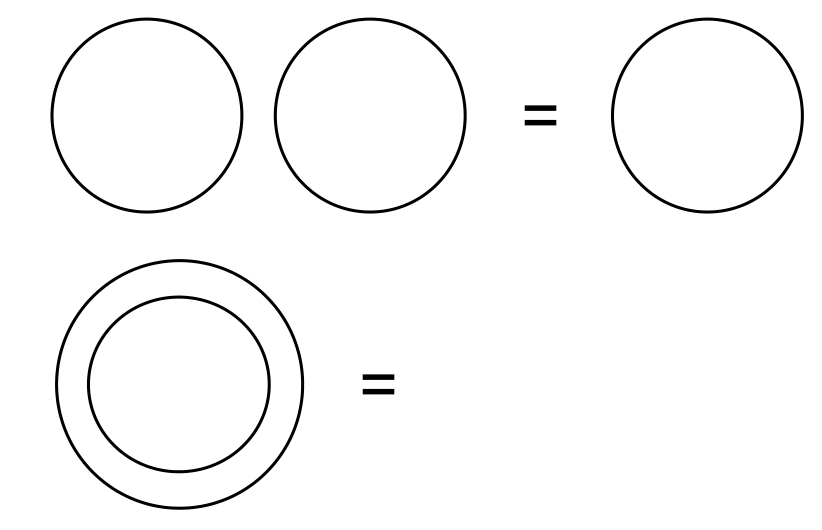

»Axiom 1: The law of calling.

The value of a call made again is the value of the call.« (LoF, 1)

⃧ ⃧ = ⃧

Read: If something is mentioned twice by its name, the name does not change.

»Axiom 2: The law of crossing

The value of crossing made again is not the value of the crossing« (LoF, 2)

(a cross nested inside a cross) = (the empty space)

Read: If the boundary is crossed twice, the initial state is restored. The repetition of the crossing has a different value than the simple crossing. This is because there is a reversal in between. With crossing the side is changed, with recrossing this action is undone.

The introduction of these two axioms may be more understandable if one remembers that Spencer-Brown started from circuits. (1) If a switch on a connection in a circuit is opened, the line is interrupted and changes from the 'on' state to the 'off' state. If several switches are opened, the 'off' state is retained. The result is the same whether one switch or several switches are opened. (2) If a switch is first opened and then closed again, the original 'on' state is restored.
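The two axioms can also be tried out in a few lines of code. The following is a minimal sketch, not Spencer-Brown's own notation: as an assumption made only for illustration, a space is modelled as a Python tuple of crosses, each cross is the tuple of whatever is written inside it, and the names `EMPTY`, `CROSS`, `is_marked` and `simplify` are hypothetical.

```python
# Assumed encoding (not from Laws of Form): a space is a tuple of crosses,
# and each cross is the tuple of crosses written inside it.
EMPTY = ()        # the empty space (unmarked state)
CROSS = ((),)     # a space containing a single empty cross (marked state)


def is_marked(space):
    """Return True if the arrangement in `space` has the marked value.

    A cross counts as marked exactly when its inside is unmarked (crossing);
    a space is marked as soon as it contains one marked cross (calling).
    """
    return any(not is_marked(inside) for inside in space)


def simplify(space):
    """Reduce any finite arrangement of crosses to CROSS or EMPTY."""
    return CROSS if is_marked(space) else EMPTY


# Axiom 1, law of calling: two crosses side by side equal one cross.
print(simplify(((), ())) == CROSS)     # True
# Axiom 2, law of crossing: a cross inside a cross equals the empty space.
print(simplify((((),),)) == EMPTY)     # True
```

Repetition appears here as several entries in one tuple and nesting as a tuple inside a tuple, so the two arrangements named in the text are the only constructions the sketch needs.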

Axiom 2 implicitly introduces the medium: If the Recrossing is understood as reflection, with which in the literal sense a border crossing is reflected (thrown back), then Axiom 2 results in the empty space. It is what remains when something is undertaken and undone. What remains behind is no longer simply nothing: It is the space that has arisen through the double movement of becoming and passing: the border was drawn first. That is becoming. Then the becoming was undone by a passing. What remains is the ground on which this movement of becoming and passing away took place. – In other words, an observer may not see anything, but he can remember the movement of crossing and recrossing. A trace on which the memory is based can remain in his memory. Compare Hegel's statements about the sublation of becoming:

»This result is a vanishedness, but it is not nothing; as such, it would be only a relapse into one of the already sublated determinations and not the result of nothing and of being. It is the unity of being and nothing that has become quiescent simplicity. But this quiescent simplicity is being, yet no longer for itself but as determination of the whole.« (HW 5.113, translated by George di Giovanni)

With the becoming and passing of the boundary in crossing and recrossing, time is implicitly set from the beginning. It is already contained in the original prompt »draw a distinction«. Spencer-Brown is even more consistent than Hegel: He not only chooses, like Hegel, terms that simultaneously describe a movement and its result (differentiation, designation can be understood both as the process of differentiating and designating as well as the result, that is the differentiation and designation achieved by the process of the differentiating and designating), but with the cross he chooses a sign that simultaneously describes the operation (movement) as well as the operand.

Remark: In the two operations of recalling and recrossing I formally see the two movements of continuing and turnabout (reversal, Umkehrung) which Hegel distinguished (HW 5.446, 453) which are central for me for the understanding of absolute indifference as well as the transition from the doctrine of being to the doctrine of essence and further the concept of idea.

But how should the sign be named in German? Spencer-Brown introduces it as »crossing the boundary« and as »calling« (LoF, 1), and after the first drawing calls it a »mark« (LoF, 3). In German translations the English word 'cross' is sometimes retained, or a neutral expression like 'token', 'mark' or 'character' is chosen. The literal German translation 'Kreuz' (cross) is mostly avoided, because the outward appearance is not a cross but an angle. However, an angle is typically pictured as opening to the right with an opening of less than 90°, like the angle sign available in HTML: ∠. The word 'hook' is also possible. None of these, however, captures Spencer-Brown's double meaning of a sign and a movement (cross, crossing). The German word 'Quere' (transverse) is also misleading, because a transverse is rather a sign like \ or / that runs crosswise, and traversing often means another kind of movement, such as crossing an empty square or a river, or taking a shortcut along the diagonal of a rectangle. Despite reservations, I find the name 'hook' the most catchy in conversations and demonstrations on a blackboard, because it best matches the outer shape. Therefore I use it in the German original. In the English translation I keep the expression 'cross' despite all reservations, because it is the most common expression in the works on Spencer-Brown.

For the other sign, which consists only of a void, Spencer-Brown has long searched for a suitable name. Only in the 2nd edition of the German translation of 1999 did he introduce the term 'ock' and the sign Ɔ.

»Shortly after it was first published, I received a telephone call from a girl named Juliet who, while expressing her enthusiasm for what I had done, also expressed her frustration at not being able to speak the empty space over the telephone. [...] She was absolutely right, and I subsequently invented the word ock (from the Indo-European okw = the eye), symbolized by a rotated lower-case letter Ɔ, to indicate in a speakable form the universal recessive constant that all systems have in common. Ordinary number systems naturally have two ocks, zero for addition and the unit for multiplication. [...] But in this book, in which its true nature was made clear for the first time, it was important to leave the ock nameless and empty, because that was the closest I could come to emphasizing that it is nothing at all, not even empty. Its invention was more powerful than the invention of zero, and sent shock waves through the entire mathematical community, which have not yet subsided.« (Spencer-Brown, 1999, xvf, quoted by Rathgeb, 118, my translation)

With the exception of Rathgeb, the secondary literature on Spencer-Brown has not taken this into account to this day. I will nevertheless follow Spencer-Brown here, as it seems to me the easiest and best way to name the two basic signs ⃧ and Ɔ in words.

Summary: Spencer-Brown explains the intention of these first steps:

»Definition

Distinction is perfect continence.« (LoF, 1)

What is meant by the unusual word 'continence'? SWH comment: (a) It can mean abstinence, moderation and self-control (continent as the opposite of incontinent). In this sense continence ensures that something does not diverge and disperse, become frayed and lose its own identity. (b) And it can mean cohesion in the sense of the continuum, whereby, for example, in geometry the infinity of points is held together on a line (SWH, 70). This seems to me to meet Spencer-Brown's concern very well.

Primary Arithmetic and Primary Algebra

Spencer-Brown presented and discussed his understanding of arithmetic and algebra at the AUM conference in Session Two on March 19th, 1973.

– Arithmetic is demonstrated on physical objects. Already in kindergarten or primary school it is learned through examples like ›3 apples and 2 apples are 5 apples‹. Everyone can take the two smaller amounts of 2 and 3 apples, combine them and count again to find that there are now 5 apples. Examples of this kind are so intuitive and striking that hardly anyone notices what is implicitly assumed and learned in passing. The individual apples remain independent and are not changed by counting. If they reacted and merged with each other as in a chemical process, or transformed or divided into other objects, counting would be impossible. Spencer-Brown therefore formulates rules of his own to ensure this (he calls them canons).

Only gradually does this become abstract arithmetic with numbers, in which no explicit reference is made to specific objects. The numbers themselves are not physical objects. At school this is learned implicitly, and it is still an undecided question whether numbers exist independently of counted objects, whether they are one of the properties of counted objects, or whether numbers exist only in the mind of rational beings such as humans, who have introduced numbers in the course of their development and can operate with them in thought. Philosophers and logicians have never reached agreement on this. I agree with Aristotle, for whom numbers exist only in thought.

In what sense can one speak of proto-numbers in proto-arithmetic, and how can they be illustrated on concrete objects in a way similar to counting apples? Spencer-Brown goes one level deeper and understands the two signs cross ⃧ and ock Ɔ in a double meaning, in which they serve both as numbers and as operation signs. Ordinary arithmetic knows expressions like ›7 + 5‹ or ›120 − 13‹. For Spencer-Brown these are character strings that are formed according to fixed rules from a certain character set, which in the decimal system consists of the digits 0, 1, 2, …, 9 and operation signs like + and −. In proto-arithmetic, he reduces the character set to the two characters cross ⃧ and ock Ɔ, from which the arithmetic expressions are composed. Strictly speaking, they are neither proto-digits nor proto-numbers, but mathematical proto-characters from which mathematical expressions are formed, in which they take on the meaning both of digits (and of the numbers composed of them according to positional notation) and of operation signs. In order not to complicate the way of speaking too much, one can still speak of proto-numbers, even if this is literally wrong. Spencer-Brown says that proto-arithmetic calculates with them. From the two characters cross ⃧ and ock Ɔ, more complex characters are composed according to the two rules of repetition and nesting. In an inexact sense, these are the proto-numbers. Spencer-Brown does not speak of arithmetic operations, but of transformations (»primitive equations«, »procedure«, »changes«; LoF, 10f) and introduces the sign ⇀ (LoF, 8). It means that the second expression is derived from the first expression by a transformation (LoF, 11).

The ordinary numbers are illustrated and understood as abstraction from counting objects. With the two signs cross ⃧ and ock Ɔ, objects are not counted in the first step, but just differentiated. Distinguishing precedes counting. If objects are not distinguished (sortal), they cannot be counted. The cross ⃧ describes that there is a difference from something to something else, and the ock Ɔ describes the background against which the boundary of something stands out from its exterior. The process of abstraction, however, remains similar: just as the understanding of numbers is gained by counting objects, the understanding of the two signs cross ⃧ and ock Ɔ is gained by distinguishing objects from each other and against the respective background.

Just as conventional arithmetic introduces the digits 0, 1, 2, 3, …, 9, forms numbers from them and calculates with them, Spencer-Brown regards his primary arithmetic as a simplified and preceding arithmetic that knows only the two signs cross ⃧ and ock Ɔ. With these two signs we are to calculate in a way comparable to what we know from ordinary arithmetic, in order to recognize deeper arithmetic connections from which ordinary arithmetic can be derived step by step.

– The arithmetic takes into account the individuality of the respective numbers. In number theory every single number is something special. What has been proven in arithmetic for a certain number cannot be easily transferred to the other numbers. Only algebra will study rules that apply to all numbers independent of their individual properties. This is also the case with proto-arithmetic, with which the special features of the two signs cross ⃧ and ock Ɔ as well as the signs composed of them are examined.

Spencer-Brown wants to return to the original understanding of numbers, which is contained in arithmetic and precedes algebra. Anyone who learns to calculate and use numbers will initially appreciate the peculiarities of each number. Each new number has something of its own. For example, children learn how the two and the multiples of two, the three and the multiples of three, the five and the multiples of five each have something special. All multiples of five, for example, have only 0 or 5 as their last digit (5, 10, 15, 20, …), and for the multiples of 3 the digit sum is divisible by 3 (the digit sum is the sum of all digits, e.g. the digit sum of 75342 is calculated as ›7 + 5 + 3 + 4 + 2 = 21‹, and since 21 is divisible by 3, 75342 is divisible by 3 too). Another example is the number 6, which is both the product and the sum of its proper divisors: ›6 = 1 · 2 · 3 = 1 + 2 + 3‹. Thus, specific properties can be recognized for each number. Everyone who is enthusiastic about mathematics is fascinated by playing with numbers and thinking about the peculiarities of the individual numbers (thaumazein, or ‘wondering’ from ‘wonder’) and has his favourite numbers and numbers that somehow seem strange to him. Even if an outsider may hardly be able to understand it, this way of playing with numbers shows, to my mind, the same spontaneous joy and attitude as singing in canon. An apt example comes from the Piaget school: I say a number and you say a number. Whoever says the greater number wins. Both have to laugh when they understand the trick of why the second player always wins, and yet they repeat the same game over and over again, with continuing pleasure. For me, this is the first and simplest example of an arithmetic idea of proof, with which the children, without knowing it, have found a procedure for proving that there are infinitely many numbers.
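The peculiarities of individual numbers mentioned here can be checked directly. The following short sketch only tests the rules on a finite range, which is of course no proof; the helper names `digit_sum` and `proper_divisors` are chosen for this illustration.

```python
import math


def digit_sum(n):
    """Sum of the decimal digits of a non-negative integer."""
    return sum(int(d) for d in str(n))


def proper_divisors(n):
    """All divisors of n that are smaller than n."""
    return [d for d in range(1, n) if n % d == 0]


# The example from the text: 7 + 5 + 3 + 4 + 2 = 21, and 21 is divisible by 3.
print(digit_sum(75342))        # 21
print(75342 % 3 == 0)          # True

# A number is divisible by 3 exactly when the sum of its digits is.
print(all((n % 3 == 0) == (digit_sum(n) % 3 == 0) for n in range(1, 10000)))  # True

# Multiples of five end in 0 or 5.
print(all(str(n)[-1] in "05" for n in range(5, 1000, 5)))  # True

# 6 is both the sum and the product of its proper divisors 1, 2, 3.
print(sum(proper_divisors(6)), math.prod(proper_divisors(6)))  # 6 6
```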

The result is rules such as the mnemonics known in German as 'Eselsbrücken' (literally 'donkey bridges'), which apply to certain numbers and are not transferable to other numbers. Everyone will have his or her favourite calculation methods, which he or she uses particularly well, comparable to favourite colours. Spencer-Brown wants to return to this original (naive, magical) way of handling numbers, because only from there will he be able to see in a new light the formal way in which numbers were operated with in the 20th century.

In Piaget's view, arithmetic belongs to the second phase of symbolic and pre-conceptual thought, which Spencer-Brown wants to preserve in its peculiarity and uniqueness. The transition to algebra will therefore in many ways be similar to the transition to operational arithmetic in phase III (at the age of 7 to 12 years).

– Within arithmetic there are propositions (theorems). Theorems for Spencer-Brown are ideas that are found in individual, unique elements. They show mathematics in the stricter (proper) sense. Algebra, on the other hand, is formulated for variables independent of the individuality of the values used in each of the variables. Therefore proto-arithmetic for Spencer-Brown cannot formally derive theorems from the axioms, but only intuitively grasp them and only in the second step prove them with formal methods and deduce consequences. On the other hand, the approach of algebra can in principle be adopted by computers. In the 20th century, mathematicians like Russell equated mathematics with the algebraic way of operating. For Spencer-Brown, this is a great step backwards, which atrophied mathematics, mathematical skills and the joy of mathematics.

What is meant by theorems is best illustrated by the example given by Spencer-Brown: Euclid proved the theorem that there are infinitely many prime numbers. His proof was: If there are only finitely many prime numbers, there is a largest prime number. Call it N. If the product of all prime numbers from 2 to N is formed, the result is ›M = 2 · 3 · 5 · 7 · 11 · … · N‹. For M it can be shown that ›M + 1‹ leaves the remainder 1 when divided by any prime number up to N. So ›M + 1‹ is either itself a prime number or divisible by a prime number greater than N. In both cases there is another prime number that is greater than N, which contradicts the assumption. This idea cannot be deduced as a consequence of the arithmetic rules, but had to be found. Two things are important for Spencer-Brown: The proof shows both an original idea of proof and a procedure with which the idea of proof is presented. Most people will at first only be convinced by the procedure that the theorem is correct, and understand the idea of proof from it. Such an idea of proof results from the way of calculating that is typical of arithmetic, which has a deep understanding (not to say compassion) of the numbers and their inner rhythms and infinitely diverse relationships and kinships. No computer can do that. Computers, on the other hand, provide a different kind of insight, which for Spencer-Brown belongs to algebra.
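The procedure of Euclid's proof can be followed with small numbers. The sketch below is only an illustration in Python, with hypothetical helper names (`primes_up_to`, `smallest_prime_factor`, `euclid_witness`). It pretends that a given prime N is the largest one, forms M + 1, and looks for a prime factor of it. Note that M + 1 is not always prime itself; what the construction guarantees is a prime factor greater than N.

```python
# A sketch of Euclid's construction; helper names are assumptions for illustration.

def primes_up_to(limit: int) -> list[int]:
    """Return all primes <= limit by trial division (fine for small limits)."""
    primes: list[int] = []
    for n in range(2, limit + 1):
        if all(n % p != 0 for p in primes):
            primes.append(n)
    return primes


def smallest_prime_factor(n: int) -> int:
    """Return the smallest prime factor of n (for n >= 2)."""
    d = 2
    while d * d <= n:
        if n % d == 0:
            return d
        d += 1
    return n


def euclid_witness(largest: int) -> tuple[int, int]:
    """Pretend `largest` is the largest prime; return (M + 1, a prime factor of M + 1)."""
    m = 1
    for p in primes_up_to(largest):
        m *= p
    return m + 1, smallest_prime_factor(m + 1)


for n in (2, 3, 5, 7, 11, 13):
    candidate, factor = euclid_witness(n)
    # The factor found is always a prime greater than the assumed largest prime.
    print(n, candidate, factor)
```

For N = 13 the number M + 1 is 30031 = 59 · 509, so it is composite, but its prime factors are still greater than 13. The program carries out the procedure; the idea behind it had to be found by Euclid.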

– Spencer-Brown had started from the two-element Boolean algebra and had sought the arithmetic which precedes it and whose algebra it is. Usually we first learn the arithmetic of natural and real numbers and then the algebraic rules for calculating with them. George Boole (1815-1864) had found a simplified algebra in 1847, which calculates with the elementary logical operations AND and OR and whose variables in the simplest case only stand for the two numbers 0 and 1 (or in the Boolean algebra for 'on' and 'off'). This was Spencer-Brown's starting point and he wanted to go one step further: Just as the arithmetic of natural and real numbers underlies the complex theories of number theory and analysis, it should be possible to find a simplified arithmetic for Boolean algebra which is only formulated for the numbers 0 and 1. As primary arithmetic (proto-arithmetic), it precedes the usual arithmetic just as much as Boolean algebra precedes ordinary algebra. In this way, he succeeded in developing an arithmetic whose character set is further restricted to the two characters cross ⃧ and ock Ɔ, and which therefore precedes Boolean algebra.

– From Spencer-Brown's point of view, Gödel's groundbreaking statements can be read differently and anew: Kurt Gödel (1906-1978) showed in 1931 that algebra is no longer able to say everything that is possible in the arithmetic that precedes it when a certain complexity of numbers is given. Arithmetic, with the individuality of its signs, contains a surplus that cannot be completely grasped and captured by any algebra. Compared to arithmetic, algebra is fundamentally incomplete. However far algebra may be developed, arithmetic and the infinite stock of its individual numbers can provide new insights that go beyond the known algebra. – When Spencer-Brown speaks of completeness in Chapter 9, he means something else. Theorem 17 says: »The primary algebra is complete« (LoF, 50). This does not mean, however, that it contains everything that can be obtained in arithmetic discoveries, but only that every arithmetic discovery can also be formulated algebraically. »That is to say, if α = β can be proved as a theorem about the primary arithmetic, then it can be demonstrated as a consequence for all α, β in the primary algebra.« (LoF, 50) Spencer-Brown therefore deliberately speaks of a theorem and not of a consequence, i.e. of a statement that can be proved with the methods of arithmetic and not with those of algebra.

»So, to find the arithmetic of the algebra of logic, as it is called, is to find the constant of which the algebra is an exposition of the variables--no more, no less. Not, just to find the constants, because that would be, in terms of arithmetic of numbers, only to find the number. But to find out how they combine, and how they relate -- and that is the arithmetic. So in finding -- I think for the first time, I don't think it was found before, I haven't found it -- the arithmetic to the algebra of logic -- or better, since logic is not necessary to the algebra, in finding the arithmetic to Boolean algebra, all I did was to seek and find a) the constants, and b) how they perform.« (AUM, Session Two)

– An example of calculating and proving from proto-arithmetic

»Theorem 1. Form The form of any finite cardinal number of crosses can be taken as the form of an expression.« (LoF, 12)

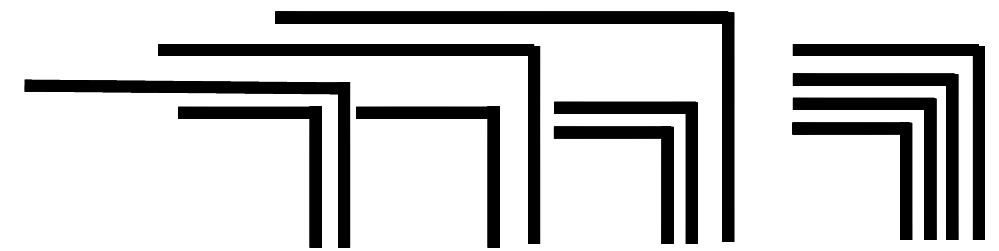

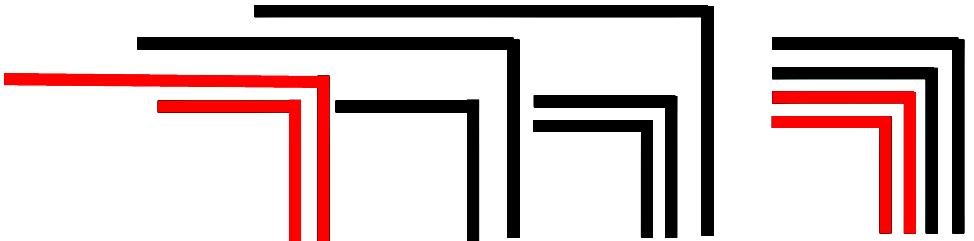

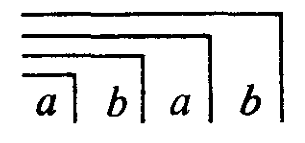

The statement is hardly to be understood directly in this choice of words. It means that any form constructed from a finite number of crosses and ocks can be converted into either a single cross or a single ock. This statement can also be formulated differently: Any shape, however complicated, composed of crosses and ocks, can be considered as a whole form. This can be illustrated by an example that Spencer-Brown later cites for the 3rd theorem (LoF, 17):

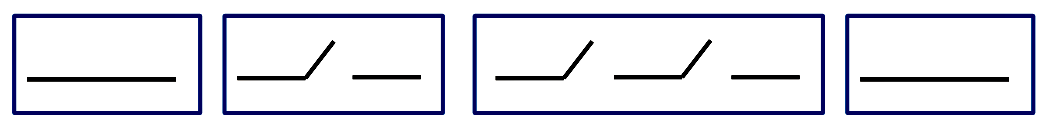

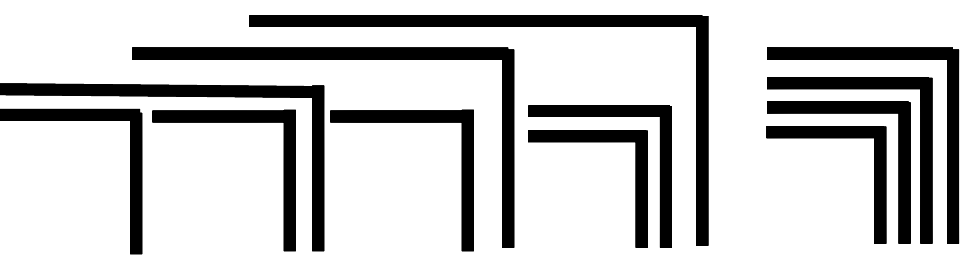

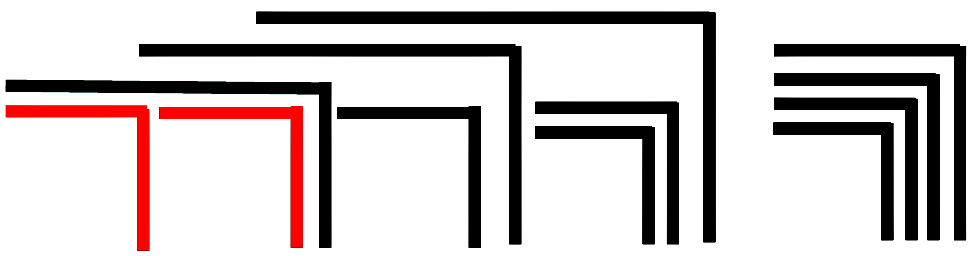

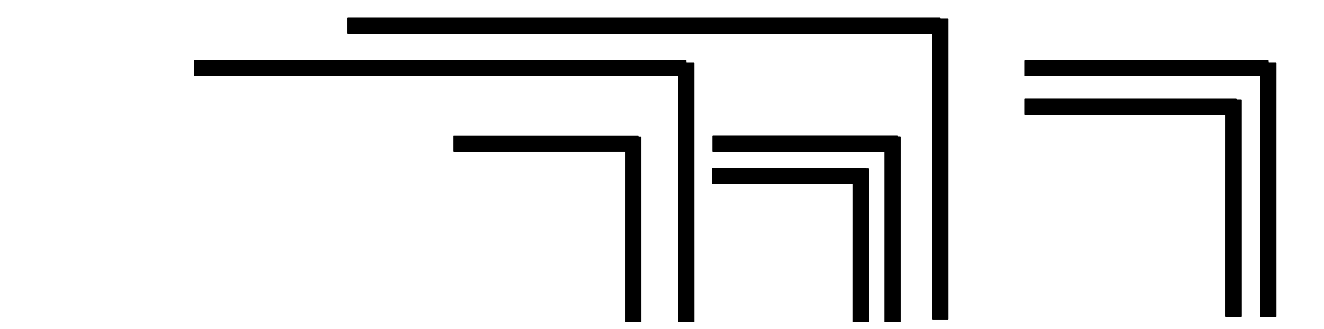

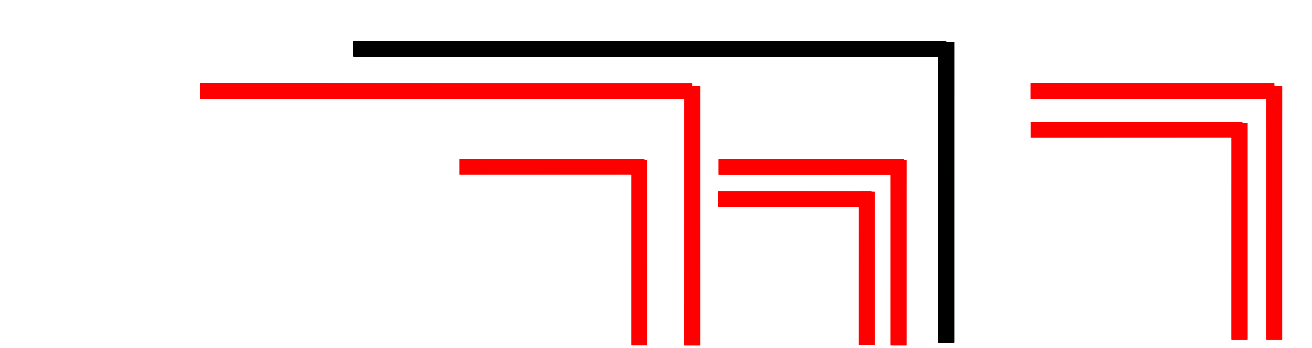

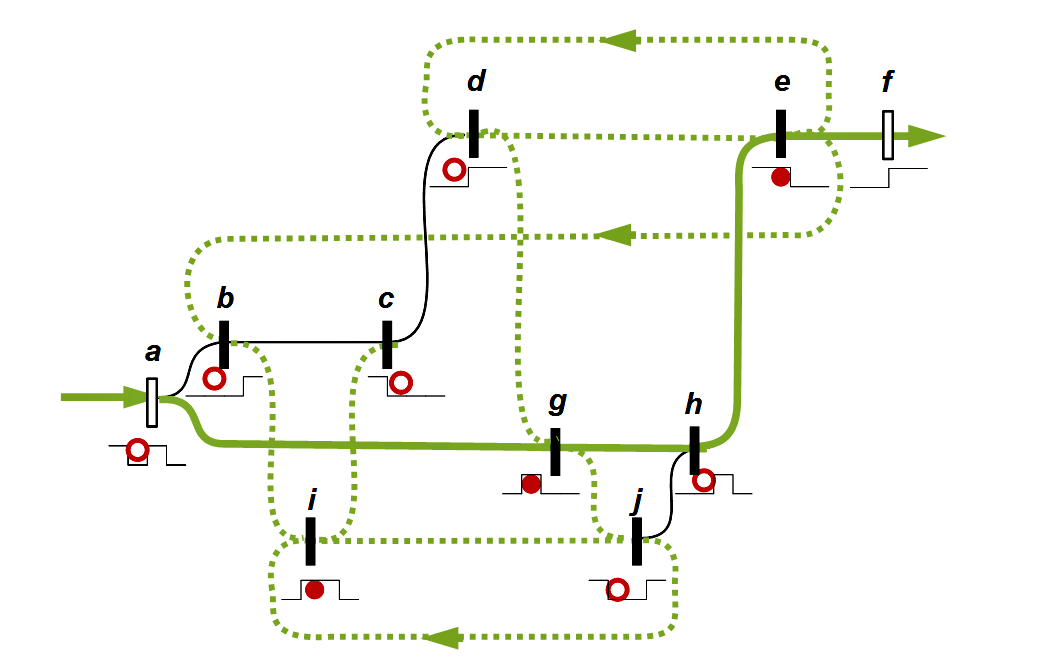

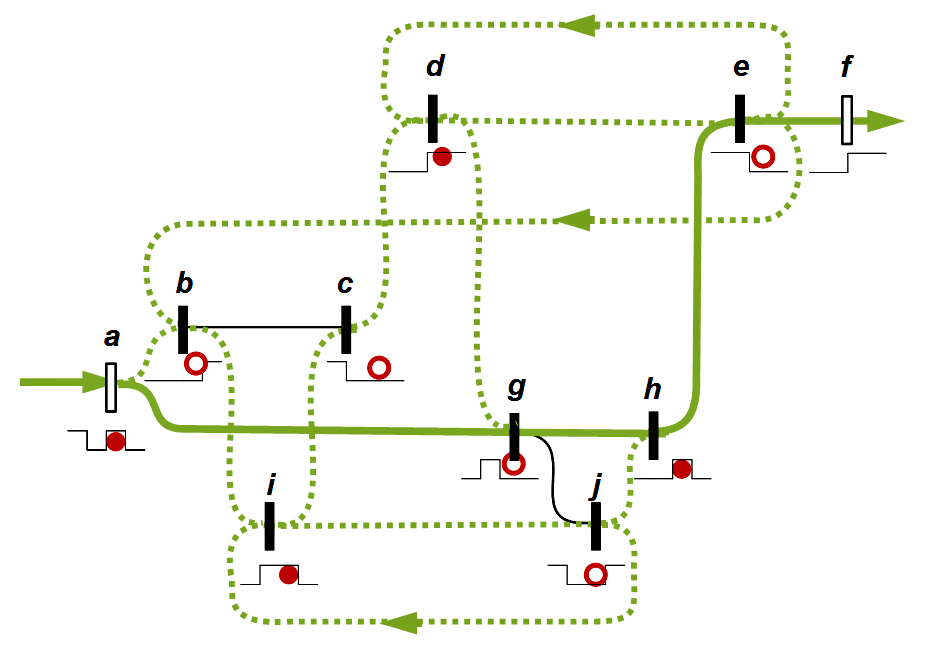

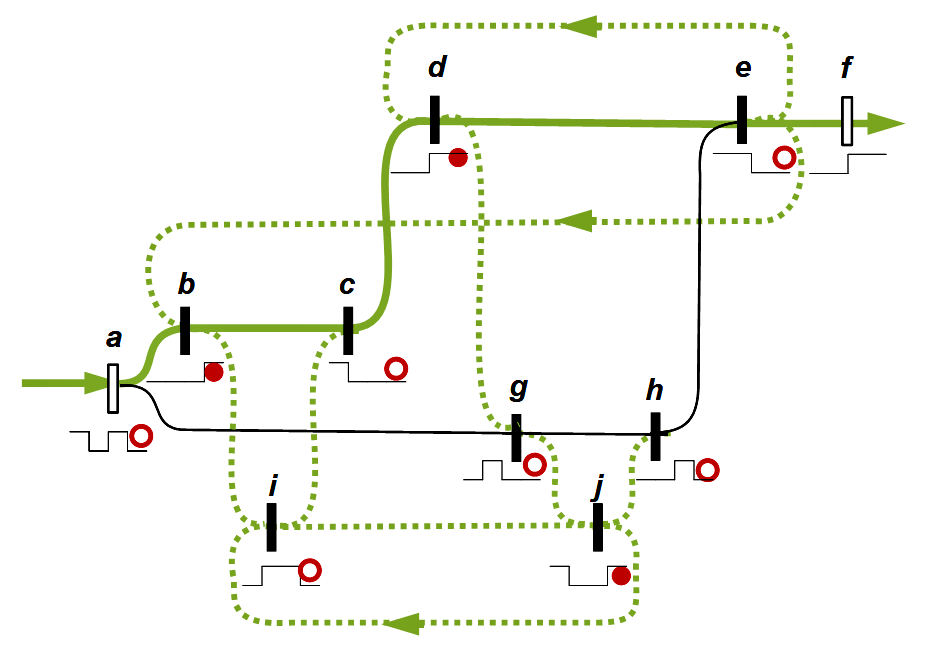

It shall be shown how this figure can be reduced to a cross or an ock. The idea of proof is to go to the deepest nesting level. There are only two possibilities: Either a cross repeats itself several times, or two crosses are nested into each other. If it repeats itself, it can be condensed into a single cross according to axiom 1 (condensation). If two crosses are nested, they can be converted into an ock according to axiom 2 (cancellation). The example also gives an impression of how the proto-arithmetic calculates. The crosses that can be contracted or deleted in the next step are highlighted in red. The character ⇀ introduced by Spencer-Brown is used for the conversions.

A comparison with networks shows how important this result is. If you have a confusing network and want to simplify it in a similar way, deadlocks can occur where any further simplification is blocked, see as an example the possibility of deadlocks in Petri nets. Such deadlocks cannot occur in the calculus chosen by Spencer-Brown. Each complex character can be converted to one of the two basic characters.
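The reduction by condensation and cancellation can be tried out mechanically. The following Python sketch rests on an assumed notation that is not Spencer-Brown's own: a cross is written as a pair of parentheses `()`, nesting as nested parentheses, and the ock as the empty string. The function names `is_well_formed` and `reduce_form` are likewise hypothetical.

```python
# Assumed notation (not from the text): "()" stands for a cross,
# the empty string stands for the ock (the unmarked state).

def is_well_formed(expr: str) -> bool:
    """Check that expr consists only of properly nested parentheses."""
    depth = 0
    for ch in expr:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
            if depth < 0:
                return False
        else:
            return False
    return depth == 0


def reduce_form(expr: str) -> list[str]:
    """Return the list of intermediate forms; the last entry is the result."""
    if not is_well_formed(expr):
        raise ValueError(f"not a well-formed arrangement of crosses: {expr!r}")
    steps = [expr]
    while True:
        if "()()" in expr:
            # Axiom 1 (condensation): two adjacent empty crosses become one.
            expr = expr.replace("()()", "()", 1)
        elif "(())" in expr:
            # Axiom 2 (cancellation): two nested crosses with nothing else
            # between them are deleted.
            expr = expr.replace("(())", "", 1)
        else:
            break
        steps.append(expr)
    return steps


for form in ("((()))", "(()())", "(()(()))()"):
    steps = reduce_form(form)
    # Show the ock as Ɔ so that the empty result stays visible.
    print(" ⇀ ".join(s if s else "Ɔ" for s in steps))
```

Every step shortens the expression, and as long as the expression is neither a single cross nor empty, one of the two patterns occurs at the deepest nesting level. Under these assumptions the loop therefore always ends in `()` or in the empty string and can never get stuck.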

– Successor ordinal

The main difference between proto-arithmetic in Spencer-Brown and the usual arithmetic is in the successor ordinal and the mathematical induction. All natural numbers n have the property that they have a successor n + 1. This means that the natural numbers can be arranged on the number line. Multiple execution of the successor relation leads to adding, and from this result the further arithmetic operations and with them the extensions of the natural numbers by the negative and rational numbers and so on. The induction axiom says: If a statement A is valid for an initial element n₀ (beginning of induction, base case) and if it can be shown that A(n + 1) follows from A(n) (induction step), then A is valid for all natural numbers from n₀ onwards.
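How adding arises from repeated execution of the successor relation, and multiplication from repeated adding, can be made explicit in a few lines. This is only a sketch in Python with hypothetical function names, restricted to non-negative integers.

```python
# Illustrative only: arithmetic on non-negative integers built from the successor.

def successor(n: int) -> int:
    """The successor relation: every natural number n has a successor n + 1."""
    return n + 1


def add(m: int, n: int) -> int:
    """m + n as n applications of the successor to m."""
    result = m
    for _ in range(n):
        result = successor(result)
    return result


def multiply(m: int, n: int) -> int:
    """m * n as n-fold repeated addition of m, starting from 0."""
    result = 0
    for _ in range(n):
        result = add(result, m)
    return result


print(add(3, 4))       # 7
print(multiply(3, 4))  # 12
```

Everything here hangs on one single operation, the successor. This is the kind of uniform construction that is not available in the same way for the characters of proto-arithmetic.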

However, Spencer-Brown does not even ask the question about the arrangement of all characters of proto-arithmetic. In a first attempt, the characters resulting from the repetitions of the crosses can be arranged like the strokes on a beer mat:

|, ||, |||, …

⃧ , ⃧ ⃧ , ⃧ ⃧ ⃧ , …

But there is also the nesting of the crosses. While the multiplication and the higher arithmetic operations can be reduced step by step to the addition, it is not possible to derive the nesting from the repetition. Both are independent. Instead, it is reasonable to choose a lexicographical (canonical) order for the arrangement of all characters considered in proto-arithmetic, as for two independent letters a and b:

a, b, aa, ab, ba, bb, aaa, aab, …

In this order, there is no simple successor relation that can be used to describe the relationship between a character and its predecessor. Correspondingly, there is no simple rule of combination with which a new character can be formed from two characters. Bernie Lewin, however, in Enthusiastic Mathematics, has explained numerous further possible arrangements of the two symbols of Spencer-Brown's calculus, which go back to the original ideas of Pythagoras and his school, and has shown how they can be used to derive the operations of the usual arithmetic. His book also shows that arithmetic has not only an inexhaustible wealth of proof ideas and theorems, but also of symbolic and graphic signs with which new ideas of proof can be created.
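The arrangement of words over two independent letters, shorter words first and words of equal length in alphabetical order, can be generated as follows. The sketch is in Python; the function name `shortlex` is an assumption made here for illustration.

```python
from itertools import count, islice, product


def shortlex(alphabet: str):
    """Yield all non-empty words over `alphabet`: shorter words first,
    words of equal length in alphabetical order."""
    for length in count(1):
        for letters in product(alphabet, repeat=length):
            yield "".join(letters)


print(list(islice(shortlex("ab"), 8)))
# ['a', 'b', 'aa', 'ab', 'ba', 'bb', 'aaa', 'aab']
```

The generator enumerates the words by length and position. It does not obtain each word from its predecessor by one uniform step such as »add 1«, because the step from one word to the next depends on the content of the whole word.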

– Opacity and transparency of arithmetic and algebra

The relationship between arithmetic and algebra raises far-reaching philosophical questions: Arithmetic is unique and opaque (dark, blurred, impervious to light), algebra is transparent and translucent. Within algebra, everything can be derived from the given basic rules, which Spencer-Brown calls initials. For him, the algebraic derivations are not proofs with their own ideas of proof, but just consequences which result formally from the initials and which can also be derived by a computer. In arithmetic, on the other hand, there is an infinite abundance of possibilities which can never be fully exhausted by a finite being like man and his art of arithmetic. There are always still unknown possibilities that can be discovered by future mathematicians. This corresponds to the statement of Gödel's incompleteness theorems: Arithmetic will never be able to fully describe all the possibilities it offers. Thus it always contains something that remains hidden (opaque) for algebra.

Arithmetic is used to recognize value progressions and their underlying rules. The simplest case is the axiom of natural numbers, according to which two adjacent natural numbers are separated by a distance of 1. On the other hand, it is still unclear whether an efficient formula for prime numbers exists. Euclid proved that there are infinitely many prime numbers, but nobody knows how their distances evolve. One of the most researched and yet unproven mathematical assumptions comes from the mathematician Bernhard Riemann (1826-1866), who in 1859 postulated an important estimate for the distribution of prime numbers based on complex numbers.

The prime numbers and their properties are the best example of what Spencer-Brown means by the opacity of arithmetic. Despite all the advanced methods of mathematics, no solution has been found. For Spencer-Brown, this is an indication that it will never be possible to survey the abundance of arithmetic completely. He put this into the unassuming statement: »Principle of transmission: With regard to the oscillation of a variable, the space outside the variable is either transparent or opaque.« (LoF, 48). The term »oscillation of a variable« refers to the value progression of prime numbers, for example.

This understanding has radically changed the relationship between mathematics and philosophy. Since Descartes, modern philosophy has been convinced that a completely transparent method must be able to see through all things. It is the task of philosophers to develop this method, and things were assumed to be transparent in principle, i.e. metaphorically speaking, not to resist the light of a superior method. Perhaps it is even man's task in the history of nature to find such a method and to free things from their concealment. In a way, they are waiting to be released from their darkness by man.

The opposite position goes back to Heraclitus, from whom the aphorism is preserved: »Nature loves to conceal Herself« (Fragments, B 123, others translate: »Concealment accompanies Physis«). While since Descartes the philosophers have expected support from mathematics to find a method with mathematical rigour that opens everything up, it is Spencer-Brown who reverses the relationship. For him, the relationship between opacity and transparency is not only repeated within mathematics, which is why mathematics cannot simply be placed on the side of transparency, but for him it was fatal and a radical paralysis of mathematical creativity to seek only what is transparent in and with mathematics. For him, the triumph of the mathematized sciences since the 19th century has been like a house of cards that immediately collapses when one looks at how mathematics works inside. It has lost its vitality for him with the dominance of the mere derivation of consequences in an algebra. For him, the Principia Mathematica by Russell and Whitehead, published in 1910-13, is an example of this way of thinking.

Kant was the first to question Descartes' prospects of success with his doctrine of the thing in itself, but he left open the question of whether the unfathomability of the thing in itself can nevertheless be determined paradoxically. For him, mathematics stood on the side of transparent science and was the guarantor of reason. Whenever he wanted to describe the freedom of reason, he reverted to images from mathematics, e.g. in his idea of a sphere of the concept that can be surveyed from a certain location and is thus transparent (KrV, B 886). However, he recognized that this is only true if the sphere is not curved. If knowledge is arranged on a curved surface, there is no point from which it can be surveyed completely. The curvature of the surface prevents one from seeing what lies beyond the horizon. Therefore, there are good reasons to understand curvature as the elementary property of the opacity of things.

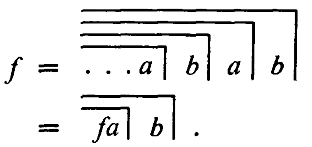

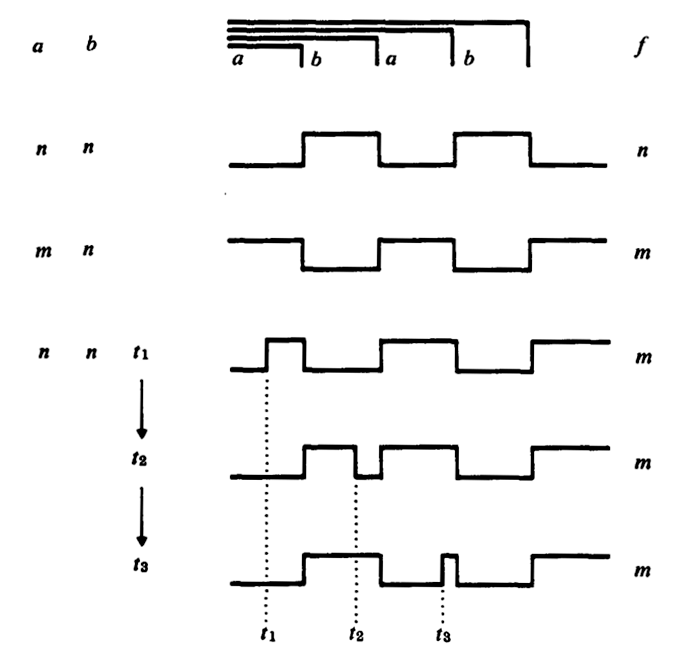

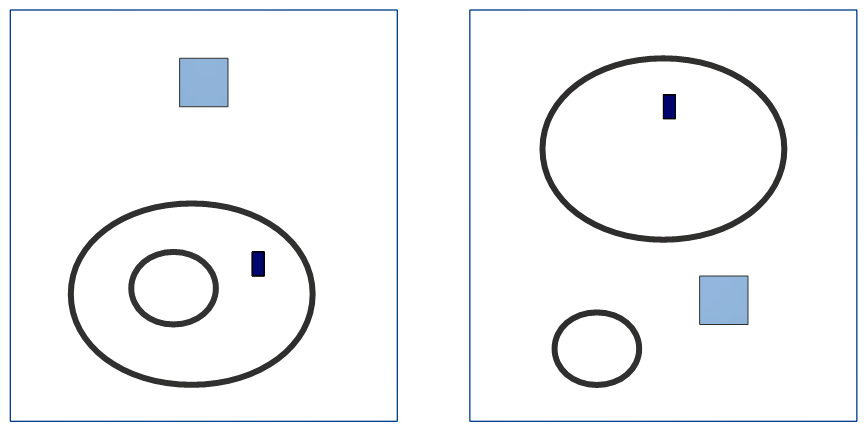

In the sense of Spencer-Brown, arithmetic is located on a curved surface, and with algebra you can never survey everything that exists on the curved surface. Nothing else remains to be done but to change one's position on the curved surface. Whoever moves on the curved surface may open up new areas that become visible, but with the same movement other areas withdraw again and disappear behind the horizon. It is never possible to survey everything at once.